Social Media

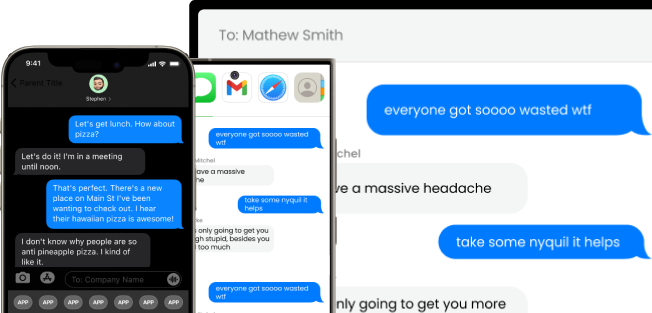

Learn how to monitor social platforms and protect your family from online risks and distractions.

Insights, tips, and strategies for seamless digital tracking and safety

Discover the latest blog posts on phone tracking, monitoring strategies, and trends.

XNSPY is the only phone tracking app that gives you total control over your phone.

Master essential tech and monitoring skills with step-by-step guides and practical advice.

Get XNSPY and see what they do on their phones without needing physical access.
